Bot Tournaments

Welcome to the Reinforce Tactics Bot Tournament page! This page tracks official tournament results between different bot types, showcasing the performance of rule-based bots, LLM-powered bots, and trained RL models.

What are Tournaments?

Tournaments in Reinforce Tactics pit different bot types against each other in a round-robin format. Each matchup consists of multiple games where bots alternate playing as Player 1 and Player 2 to account for first-move advantage.

Tournament Format

- Round-Robin: Every bot plays against every other bot

- Fair Play: Equal games with each bot as Player 1 and Player 2

- Map: Quick matches typically use the 6×6 beginner map; the official tournaments below play every map in a four-map pool

- Results: Win/Loss/Draw records with win rate statistics
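The format above can be sketched as a small schedule generator. This is an illustration only, not the logic in `scripts/tournament.py`; the function `build_schedule` and its parameters are hypothetical. With four bots, four maps, and two games per side, it produces 16 games per pair and 48 games per bot, which matches the Version 0.1.0 configuration.

```python
from itertools import combinations


def build_schedule(bots, maps, games_per_side):
    """Hypothetical round-robin scheduler.

    Every pair of bots meets on every map, and each bot takes the
    Player 1 seat the same number of times to offset first-move advantage.
    Returns a list of (map, player_1, player_2) tuples.
    """
    schedule = []
    for bot_a, bot_b in combinations(bots, 2):
        for map_name in maps:
            for _ in range(games_per_side):
                schedule.append((map_name, bot_a, bot_b))  # bot_a is Player 1
                schedule.append((map_name, bot_b, bot_a))  # sides swapped
    return schedule


bots = ["SimpleBot", "MediumBot", "AdvancedBot", "Claude Haiku 4.5"]
maps = ["beginner.csv", "funnel_point.csv", "center_mountains.csv", "corner_points.csv"]
schedule = build_schedule(bots, maps, games_per_side=2)
print(len(schedule))  # 6 pairs x 4 maps x 2 sides x 2 games = 96
```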

Bot Types

SimpleBot

A rule-based AI that follows a simple strategy:

- Purchases the most expensive units first (one per turn)

- Prioritizes capturing buildings and towers

- Attacks nearby enemy units

- Always included in tournaments
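SimpleBot's purchasing rule can be sketched as a greedy choice. The unit prices below are placeholders invented for illustration, not the game's real costs, and `simple_purchase` is a hypothetical helper rather than SimpleBot's actual code.

```python
# Placeholder prices for illustration only; these are NOT the game's real unit costs.
HYPOTHETICAL_COSTS = {"Warrior": 200, "Archer": 250, "Mage": 300, "Knight": 350}


def simple_purchase(gold, costs=HYPOTHETICAL_COSTS):
    """Return the single most expensive unit type affordable with `gold`,
    or None if nothing is affordable (one purchase per turn)."""
    affordable = [name for name, cost in costs.items() if cost <= gold]
    if not affordable:
        return None
    return max(affordable, key=lambda name: costs[name])


print(simple_purchase(320))  # Mage
print(simple_purchase(100))  # None
```

Substitute the real unit costs from the game data before drawing any conclusions from this sketch.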

MediumBot

An improved rule-based AI with more sophisticated strategies:

- Maximizes unit purchases: Buys multiple units per turn instead of just one

- Coordinated attacks: Plans focus-fire to kill enemies in one turn

- Smart prioritization: Captures structures closest to its own HQ first

- Interrupts captures: Attacks enemies that are capturing structures

- Efficient combat: Evaluates damage-per-cost to make better attack decisions

- Included in tournaments by default alongside SimpleBot
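The focus-fire idea can be sketched as a greedy planner. This is a hypothetical illustration, not MediumBot's implementation. The names `Enemy` and `plan_focus_fire`, and all HP, cost, and damage values, are assumptions. Ranking targets by gold value per HP is only one possible reading of "damage-per-cost," and range and movement are ignored.

```python
from dataclasses import dataclass


@dataclass
class Enemy:
    name: str
    hp: int
    cost: int  # gold value removed from the opponent if this unit dies


def plan_focus_fire(attacker_damage, enemies):
    """Hypothetical focus-fire planner.

    attacker_damage maps attacker id -> damage it can deal this turn.
    Targets are ranked by gold value per HP (value removed per point of
    damage spent). Each target gets the fewest attackers (largest hits
    first) that kill it, and is skipped if the remaining attackers cannot.
    Returns a dict of target name -> list of attacker ids.
    """
    free = sorted(attacker_damage, key=attacker_damage.get, reverse=True)
    plan = {}
    for enemy in sorted(enemies, key=lambda e: e.cost / e.hp, reverse=True):
        assigned, total = [], 0
        for attacker in free:
            if total >= enemy.hp:
                break
            assigned.append(attacker)
            total += attacker_damage[attacker]
        if total >= enemy.hp:  # commit only to guaranteed kills
            plan[enemy.name] = assigned
            free = [a for a in free if a not in assigned]
    return plan


# Hypothetical stats, for illustration only
attackers = {"knight_1": 8, "archer_1": 5, "warrior_1": 4}
enemies = [Enemy("enemy_mage", hp=9, cost=250), Enemy("enemy_knight", hp=15, cost=350)]
print(plan_focus_fire(attackers, enemies))  # {'enemy_mage': ['knight_1', 'archer_1']}
```

In this example the leftover warrior cannot kill the enemy knight alone, so the planner does not commit it to that target.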

AdvancedBot

A highly sophisticated AI extending MediumBot with enhanced strategic capabilities:

- Inherits from MediumBot: Builds upon MediumBot's proven coordinated attack and capture strategies

- Enhanced unit composition: Optimized purchasing across all 8 unit types (25% Warriors, 20% Archers, 15% Mages, 10% Knights, 10% Rogues, 8% Barbarians, 7% Clerics, 5% Sorcerers)

- Mountain positioning: Positions units on mountains before attacking for bonus damage

- Smart ranged combat: Prioritizes ranged attacks with Archers and Mages to minimize counter-attack damage

- Special ability usage: Effectively uses Mage Paralyze on high-value targets and Cleric Heal on damaged frontline units

- Map awareness: Analyzes HQ locations and defensive mountain positions on first turn

- Aggressive tactics: More aggressive attack prioritization and pursuit of enemies

- Included in tournaments by default alongside SimpleBot and MediumBot
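One way to steer purchases toward the listed unit mix is to buy whichever type is furthest below its target share. The sketch below takes the percentages from the list above, but the deficit rule and the helper `next_unit_type` are hypothetical and not necessarily how AdvancedBot chooses.

```python
# Target shares listed for AdvancedBot (they sum to 100%).
TARGET_MIX = {
    "Warrior": 0.25, "Archer": 0.20, "Mage": 0.15, "Knight": 0.10,
    "Rogue": 0.10, "Barbarian": 0.08, "Cleric": 0.07, "Sorcerer": 0.05,
}


def next_unit_type(counts, affordable=None):
    """Hypothetical: pick the unit type furthest below its target share.

    counts: dict of unit type -> number currently owned.
    affordable: optional set of unit types the bot can pay for this turn.
    """
    total = sum(counts.values())
    candidates = [u for u in TARGET_MIX if affordable is None or u in affordable]
    if not candidates:
        return None

    def deficit(unit):
        share = counts.get(unit, 0) / total if total else 0.0
        return TARGET_MIX[unit] - share

    return max(candidates, key=deficit)


print(next_unit_type({}))                          # Warrior
print(next_unit_type({"Warrior": 1}))              # Archer
print(next_unit_type({"Warrior": 2, "Archer": 1})) # Mage
```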

LLM Bots

Bots powered by large language models (LLMs):

- OpenAIBot: Powered by GPT models

- ClaudeBot: Powered by Anthropic's Claude

- GeminiBot: Powered by Google's Gemini

- Uses natural language reasoning to make strategic decisions

Model Bots

Trained using Reinforcement Learning:

- Uses Stable-Baselines3 (PPO, A2C, or DQN)

- Trained through self-play and opponent challenges

- Learns optimal strategies through experience

Running Your Own Tournament

Want to run a tournament? It's easy! See the Tournament System guide for detailed instructions.

Quick start:

```bash
python3 scripts/tournament.py
```

Official Tournament Results

Below are the results from official tournaments run on the Reinforce Tactics platform. Results are organized by game version.

Version 0.1.0

Tournament #1 - December 19, 2025

Configuration:

- Games per matchup: 2 per side per map (16 games per bot pair across all four maps)

- Map pool mode: All maps played

- Starting ELO: 1500
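This page gives only the starting rating, not the rating formula. The sketch below shows the standard Elo update as one common choice. The K-factor of 32 and the draw score of 0.5 are assumptions, not details taken from `scripts/tournament.py`. Each update is zero-sum, so the ELO Change column should total zero, and it does in both standings tables on this page (for Version 0.1.0, +193 + 75 - 95 - 173 = 0).

```python
def expected_score(rating_a, rating_b):
    """Standard Elo expected score of A against B (between 0 and 1)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a, rating_b, score_a, k=32):
    """Return updated (rating_a, rating_b) after one game.

    score_a is 1 for an A win, 0.5 for a draw, 0 for an A loss.
    k=32 is an assumed K-factor, not a value taken from the tournament script.
    Whatever A gains, B loses, so the update is zero-sum.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


print(update_elo(1500, 1500, 1.0))  # (1516.0, 1484.0)
print(update_elo(1500, 1500, 0.5))  # (1500.0, 1500.0)
```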

Maps Used:

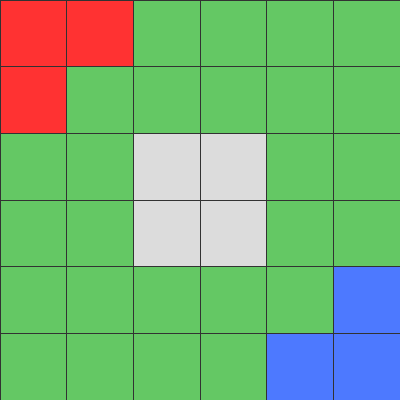

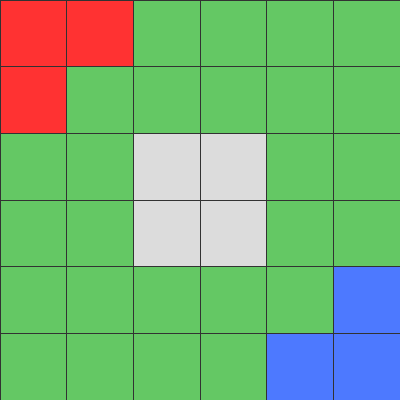

beginner.csv

6×6 • 2 Players

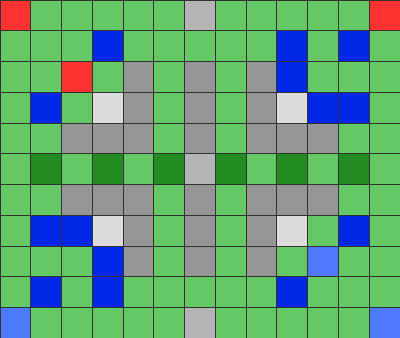

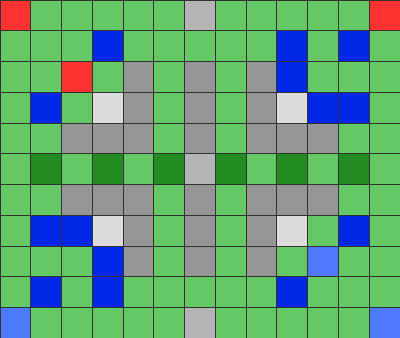

funnel_point.csv

13×11 • 2 Players

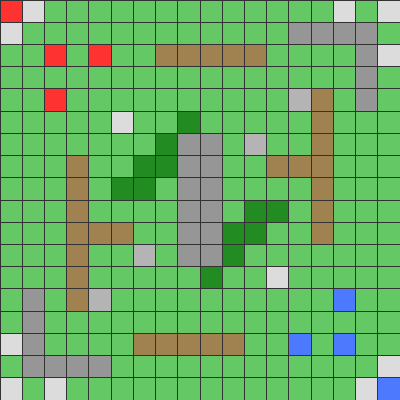

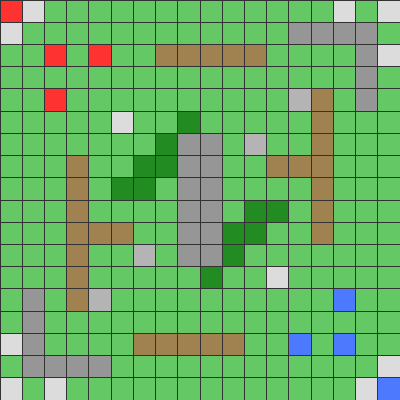

center_mountains.csv

18×18 • 2 Players

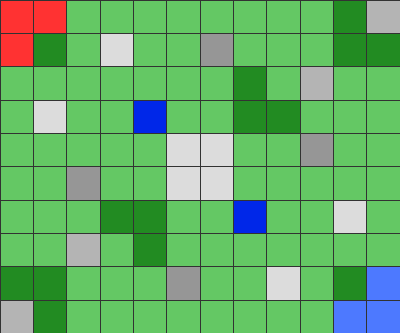

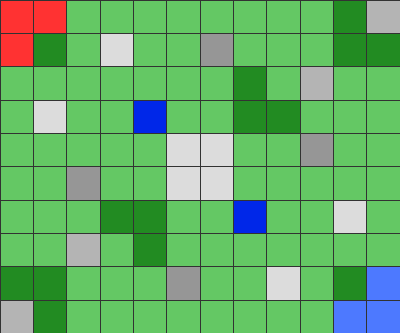

corner_points.csv

12×10 • 2 Players

Map Legend:

- 🟩 Green - Plains (walkable terrain)

- 🟦 Blue - Ocean/Water (impassable)

- ⬜ Light Gray - Mountains (impassable, provides attack bonus)

- 🌲 Dark Green - Forest (provides defense bonus)

- 🟫 Brown - Roads (faster movement)

- 🔴 Red - Player 1 structures (HQ, Buildings)

- 🔵 Blue Tint - Player 2 structures (HQ, Buildings)

- ⬜ White/Gray - Neutral Towers/Buildings

Participants:

- SimpleBot (rule-based)

- MediumBot (rule-based)

- AdvancedBot (rule-based)

- Claude Haiku 4.5 (claude-haiku-4-5-20251001)

Final Standings

| Rank | Bot Name | Wins | Losses | Draws | Total Games | Win Rate | ELO | ELO Change |
|---|---|---|---|---|---|---|---|---|
| 🥇 1 | AdvancedBot | 36 | 2 | 10 | 48 | 75.0% | 1693 | +193 |
| 🥈 2 | MediumBot | 19 | 10 | 19 | 48 | 39.6% | 1575 | +75 |
| 🥉 3 | SimpleBot | 1 | 20 | 27 | 48 | 2.1% | 1405 | -95 |
| 4 | Claude Haiku 4.5 | 2 | 26 | 20 | 48 | 4.2% | 1327 | -173 |

Head-to-Head Results

Wins and Losses are counted from the perspective of the first bot in each matchup.

| Matchup | Wins | Losses | Draws | Notes |
|---|---|---|---|---|
| SimpleBot vs MediumBot | 0 | 6 | 10 | MediumBot undefeated; mostly draws |
| SimpleBot vs AdvancedBot | 0 | 12 | 4 | AdvancedBot undefeated |
| SimpleBot vs Claude Haiku 4.5 | 1 | 2 | 13 | Mostly draws, slight LLM edge |
| MediumBot vs AdvancedBot | 2 | 10 | 4 | AdvancedBot clearly superior |
| MediumBot vs Claude Haiku 4.5 | 11 | 0 | 5 | MediumBot undefeated |
| AdvancedBot vs Claude Haiku 4.5 | 14 | 0 | 2 | Complete domination |
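The Win Rate column appears to be wins divided by total games, with draws counting as non-wins. As a cross-check, the sketch below folds the six head-to-head rows back into per-bot records. It reads each row's Wins and Losses from the first bot's perspective, and the results reproduce the Final Standings exactly. The helper `standings` is illustrative and not part of the tournament script.

```python
from collections import defaultdict

# (first bot, second bot, first-bot wins, first-bot losses, draws), from the Version 0.1.0 table
HEAD_TO_HEAD = [
    ("SimpleBot", "MediumBot", 0, 6, 10),
    ("SimpleBot", "AdvancedBot", 0, 12, 4),
    ("SimpleBot", "Claude Haiku 4.5", 1, 2, 13),
    ("MediumBot", "AdvancedBot", 2, 10, 4),
    ("MediumBot", "Claude Haiku 4.5", 11, 0, 5),
    ("AdvancedBot", "Claude Haiku 4.5", 14, 0, 2),
]


def standings(rows):
    """Fold head-to-head rows into per-bot [wins, losses, draws] records."""
    record = defaultdict(lambda: [0, 0, 0])
    for a, b, wins, losses, draws in rows:
        record[a][0] += wins
        record[a][1] += losses
        record[a][2] += draws
        record[b][0] += losses  # the second bot's wins are the first bot's losses
        record[b][1] += wins
        record[b][2] += draws
    return dict(record)


for bot, (w, l, d) in standings(HEAD_TO_HEAD).items():
    print(f"{bot}: {w}-{l}-{d}, win rate {w / (w + l + d):.1%}")
```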

Per-Map Performance

beginner.csv (6×6)

| Bot | Wins | Losses | Draws |
|---|---|---|---|
| AdvancedBot | 12 | 0 | 0 |
| MediumBot | 4 | 4 | 4 |
| Claude Haiku 4.5 | 2 | 7 | 3 |
| SimpleBot | 1 | 8 | 3 |

funnel_point.csv (13×11)

| Bot | Wins | Losses | Draws |
|---|---|---|---|
| MediumBot | 8 | 0 | 4 |
| AdvancedBot | 6 | 2 | 4 |
| SimpleBot | 0 | 4 | 8 |
| Claude Haiku 4.5 | 0 | 8 | 4 |

center_mountains.csv (18×18)

| Bot | Wins | Losses | Draws |
|---|---|---|---|
| AdvancedBot | 6 | 0 | 6 |
| MediumBot | 1 | 2 | 9 |
| SimpleBot | 0 | 2 | 10 |
| Claude Haiku 4.5 | 0 | 3 | 9 |

corner_points.csv (12×10)

| Bot | Wins | Losses | Draws |
|---|---|---|---|
| AdvancedBot | 12 | 0 | 0 |
| MediumBot | 6 | 4 | 2 |
| SimpleBot | 0 | 6 | 6 |
| Claude Haiku 4.5 | 0 | 8 | 4 |
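The four per-map tables can be sanity-checked mechanically. On each map, total wins should equal total losses, and the draw total should be even because every drawn game appears in two bots' rows. Summing a bot's rows across maps should also reproduce its overall record. The helper names below are illustrative; the numbers are copied from the tables.

```python
# (wins, losses, draws) per bot on each map, copied from the Version 0.1.0 tables
PER_MAP = {
    "beginner.csv": {"AdvancedBot": (12, 0, 0), "MediumBot": (4, 4, 4),
                     "Claude Haiku 4.5": (2, 7, 3), "SimpleBot": (1, 8, 3)},
    "funnel_point.csv": {"MediumBot": (8, 0, 4), "AdvancedBot": (6, 2, 4),
                         "SimpleBot": (0, 4, 8), "Claude Haiku 4.5": (0, 8, 4)},
    "center_mountains.csv": {"AdvancedBot": (6, 0, 6), "MediumBot": (1, 2, 9),
                             "SimpleBot": (0, 2, 10), "Claude Haiku 4.5": (0, 3, 9)},
    "corner_points.csv": {"AdvancedBot": (12, 0, 0), "MediumBot": (6, 4, 2),
                          "SimpleBot": (0, 6, 6), "Claude Haiku 4.5": (0, 8, 4)},
}


def is_consistent(rows):
    """Every win must be someone's loss, and each drawn game is listed twice."""
    wins = sum(win for win, _, _ in rows.values())
    losses = sum(loss for _, loss, _ in rows.values())
    draws = sum(draw for _, _, draw in rows.values())
    return wins == losses and draws % 2 == 0


def drawn_games(rows):
    """Number of drawn games on a map (each draw appears in two bots' rows)."""
    return sum(draw for _, _, draw in rows.values()) // 2


def overall(per_map, bot):
    """Sum a bot's per-map records into an overall (wins, losses, draws)."""
    return tuple(sum(rows[bot][i] for rows in per_map.values()) for i in range(3))


for map_name, rows in PER_MAP.items():
    print(map_name, is_consistent(rows), drawn_games(rows))
print(overall(PER_MAP, "AdvancedBot"))  # (36, 2, 10)
```

Running this shows that center_mountains.csv has 17 drawn games out of 24, the most of any map.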

Analysis

Key Findings:

- AdvancedBot dominance: AdvancedBot performed exceptionally well, achieving a 75% win rate and gaining +193 ELO. Its sophisticated strategies (mountain positioning, ranged combat, special abilities) proved highly effective.

- MediumBot solid performance: With a 39.6% win rate and +75 ELO gain, MediumBot showed the value of coordinated attacks and maximized unit production over SimpleBot's basic approach.

- LLM bot struggles: Claude Haiku placed last with only a 4.2% win rate (-173 ELO). It ranked below SimpleBot despite one more win (2 vs. 1), likely because it also lost more games (26 vs. 20). This suggests that current LLM reasoning may not be well suited to the tactical decision-making this game format requires.

- High draw rates: Many matches ended in draws, particularly on center_mountains.csv (defensive terrain) and between lower-performing bots. This suggests that defensive play is viable, especially on terrain-heavy maps.

- Map influence: AdvancedBot won all 12 of its games on both beginner.csv and corner_points.csv, while MediumBot outperformed it on funnel_point.csv (8-0-4 vs. 6-2-4).

Notable Observations:

- AdvancedBot never lost on 3 of 4 maps; both of its losses came on funnel_point.csv

- The hierarchy is clear: AdvancedBot > MediumBot > SimpleBot/Claude Haiku

- center_mountains.csv produced the most draws (17 of its 24 games), consistent with its defensive terrain

Version 0.1.1

Tournament #1 - December 29, 2025

Configuration:

- Games per matchup: 8 games per bot pair (1 per side per map)

- Map pool mode: All maps played

- Starting ELO: 1500

- Bots: 8 participants (3 rule-based, 5 LLM-powered)

Maps Used:

beginner.csv

6×6 • 2 Players

funnel_point.csv

13×11 • 2 Players

center_mountains.csv

18×18 • 2 Players

corner_points.csv

12×10 • 2 Players

Participants:

- SimpleBot (rule-based)

- MediumBot (rule-based)

- AdvancedBot (rule-based)

- Claude Haiku 4.5 (claude-haiku-4-5-20251001)

- Claude Sonnet 4.5 (claude-sonnet-4-5-20250929)

- ChatGPT 5 Mini (gpt-5-mini-2025-08-07)

- Gemini 2.0 Flash (gemini-2.0-flash)

- Gemini 3.0 Flash (gemini-3-flash-preview)

Final Standings

| Rank | Bot Name | Wins | Losses | Draws | Total Games | Win Rate | ELO | ELO Change |
|---|---|---|---|---|---|---|---|---|
| 🥇 1 | AdvancedBot | 34 | 1 | 21 | 56 | 60.7% | 1702 | +202 |
| 🥈 2 | Gemini 3.0 Flash | 33 | 4 | 19 | 56 | 58.9% | 1646 | +146 |
| 🥉 3 | MediumBot | 26 | 8 | 22 | 56 | 46.4% | 1618 | +118 |
| 4 | Claude Sonnet 4.5 | 19 | 13 | 24 | 56 | 33.9% | 1508 | +8 |
| 5 | SimpleBot | 1 | 20 | 35 | 56 | 1.8% | 1410 | -90 |
| 6 | Claude Haiku 4.5 | 3 | 22 | 31 | 56 | 5.4% | 1389 | -111 |
| 7 | ChatGPT 5 Mini | 2 | 22 | 32 | 56 | 3.6% | 1386 | -114 |
| 8 | Gemini 2.0 Flash | 0 | 28 | 28 | 56 | 0.0% | 1341 | -159 |

Head-to-Head Results

Wins and Losses are counted from the perspective of the first bot in each matchup. The table lists 11 selected pairings out of the 28 in the tournament.

| Matchup | Wins | Losses | Draws | Notes |
|---|---|---|---|---|
| AdvancedBot vs Gemini 3.0 Flash | 4 | 0 | 4 | AdvancedBot undefeated |
| AdvancedBot vs MediumBot | 5 | 1 | 2 | AdvancedBot dominant |
| AdvancedBot vs Claude Sonnet 4.5 | 5 | 0 | 3 | Complete domination |
| Gemini 3.0 Flash vs MediumBot | 3 | 0 | 5 | Gemini edges out |
| Gemini 3.0 Flash vs Claude Sonnet 4.5 | 4 | 0 | 4 | Gemini superior |
| MediumBot vs Claude Sonnet 4.5 | 4 | 0 | 4 | MediumBot undefeated |
| Claude Sonnet 4.5 vs Claude Haiku 4.5 | 5 | 0 | 3 | Sonnet dominates Haiku |
| Claude Sonnet 4.5 vs ChatGPT 5 Mini | 4 | 0 | 4 | Sonnet edges GPT |
| Claude Sonnet 4.5 vs Gemini 2.0 Flash | 6 | 0 | 2 | Sonnet undefeated |
| Claude Haiku 4.5 vs ChatGPT 5 Mini | 0 | 0 | 8 | Complete stalemate |
| Gemini 2.0 Flash vs SimpleBot | 0 | 0 | 8 | All draws |

Per-Map Performance

beginner.csv (6×6)

| Bot | Wins | Losses | Draws |
|---|---|---|---|
| AdvancedBot | 14 | 0 | 0 |
| Gemini 3.0 Flash | 12 | 2 | 0 |
| Claude Sonnet 4.5 | 7 | 5 | 2 |
| MediumBot | 6 | 4 | 4 |
| ChatGPT 5 Mini | 2 | 8 | 4 |
| Claude Haiku 4.5 | 2 | 8 | 4 |
| SimpleBot | 1 | 8 | 5 |
| Gemini 2.0 Flash | 0 | 9 | 5 |

funnel_point.csv (13×11)

| Bot | Wins | Losses | Draws |
|---|---|---|---|
| Gemini 3.0 Flash | 11 | 1 | 2 |
| MediumBot | 9 | 1 | 4 |
| Claude Sonnet 4.5 | 8 | 5 | 1 |
| AdvancedBot | 4 | 1 | 9 |
| Claude Haiku 4.5 | 1 | 6 | 7 |
| SimpleBot | 0 | 6 | 8 |
| ChatGPT 5 Mini | 0 | 6 | 8 |
| Gemini 2.0 Flash | 0 | 7 | 7 |

center_mountains.csv (18×18)

| Bot | Wins | Losses | Draws |
|---|---|---|---|
| AdvancedBot | 4 | 0 | 10 |
| Claude Sonnet 4.5 | 3 | 0 | 11 |
| Gemini 3.0 Flash | 2 | 0 | 12 |
| MediumBot | 2 | 1 | 11 |
| SimpleBot | 0 | 1 | 13 |
| ChatGPT 5 Mini | 0 | 2 | 12 |
| Claude Haiku 4.5 | 0 | 2 | 12 |
| Gemini 2.0 Flash | 0 | 5 | 9 |

corner_points.csv (12×10)

| Bot | Wins | Losses | Draws |
|---|---|---|---|
| AdvancedBot | 12 | 0 | 2 |
| MediumBot | 9 | 2 | 3 |
| Gemini 3.0 Flash | 8 | 1 | 5 |
| Claude Sonnet 4.5 | 1 | 3 | 10 |
| SimpleBot | 0 | 5 | 9 |
| ChatGPT 5 Mini | 0 | 6 | 8 |
| Claude Haiku 4.5 | 0 | 6 | 8 |
| Gemini 2.0 Flash | 0 | 7 | 7 |

LLM Token Usage

| Model | Input Tokens | Output Tokens | Total Tokens | Games |
|---|---|---|---|---|
| Gemini 3.0 Flash | 24,174,240 | 1,295,618 | 25,469,858 | 58 |
| ChatGPT 5 Mini | 13,899,718 | 4,508,521 | 18,408,239 | 57 |
| Claude Sonnet 4.5 | 16,585,568 | 695,199 | 17,280,767 | 56 |
| Claude Haiku 4.5 | 16,116,414 | 552,455 | 16,668,869 | 56 |
| Gemini 2.0 Flash | 12,057,436 | 402,555 | 12,459,991 | 56 |
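The Games column differs slightly between models, so per-game averages give a fairer comparison. This sketch divides the token counts in the table by games played. The table figures are the only inputs, and `tokens_per_game` is an illustrative helper.

```python
# (input tokens, output tokens, games) from the LLM Token Usage table
TOKEN_USAGE = {
    "Gemini 3.0 Flash": (24_174_240, 1_295_618, 58),
    "ChatGPT 5 Mini": (13_899_718, 4_508_521, 57),
    "Claude Sonnet 4.5": (16_585_568, 695_199, 56),
    "Claude Haiku 4.5": (16_116_414, 552_455, 56),
    "Gemini 2.0 Flash": (12_057_436, 402_555, 56),
}


def tokens_per_game(usage):
    """Average (total tokens, output tokens) per game for each model."""
    return {
        model: ((tokens_in + tokens_out) / games, tokens_out / games)
        for model, (tokens_in, tokens_out, games) in usage.items()
    }


for model, (total, output) in tokens_per_game(TOKEN_USAGE).items():
    print(f"{model}: ~{total / 1000:.0f}K tokens/game ({output / 1000:.1f}K output)")
```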

Analysis

Key Findings:

- AdvancedBot maintains dominance: With a 60.7% win rate and +202 ELO, AdvancedBot showed that its strategies remain effective against a larger, more diverse field of competitors; it lost only one of its 56 games.

- Gemini 3.0 Flash emerges as top LLM: Gemini 3.0 Flash achieved an impressive 58.9% win rate (+146 ELO), becoming the first LLM bot to finish in the top 3. All four of its losses came against AdvancedBot, so it went undefeated against every other bot, including all other LLM bots.

- Claude Sonnet 4.5 shows promise: With a 33.9% win rate, Claude Sonnet 4.5 far outperformed Claude Haiku 4.5 (5.4%) and ChatGPT 5 Mini (3.6%) and beat Haiku 5-0 (3 draws) head-to-head, suggesting stronger tactical reasoning than Haiku.

- LLM tier separation: Clear performance tiers emerged among LLMs: Gemini 3.0 Flash (top tier), Claude Sonnet 4.5 (mid tier), and Claude Haiku 4.5/ChatGPT 5 Mini/Gemini 2.0 Flash (bottom tier).

- Map-specific patterns:

  - beginner.csv: Decisive outcomes with few draws (12 of 56 games); fast-paced combat favors aggressive bots

  - center_mountains.csv: Extremely high draw rate (above 75% for six of eight bots); defensive terrain tends to create stalemates

  - funnel_point.csv: Gemini 3.0 Flash and MediumBot excelled with 11 and 9 wins respectively
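The center_mountains.csv draw-rate figure can be checked against the per-map table. In Version 0.1.1, each bot played 14 games on each map (7 opponents, one game per side), and each drawn game is counted once for each of its two bots. The draw counts below are copied from that table.

```python
# Draws out of 14 games per bot on center_mountains.csv (Version 0.1.1 table)
CENTER_MOUNTAINS_DRAWS = {
    "AdvancedBot": 10, "Claude Sonnet 4.5": 11, "Gemini 3.0 Flash": 12,
    "MediumBot": 11, "SimpleBot": 13, "ChatGPT 5 Mini": 12,
    "Claude Haiku 4.5": 12, "Gemini 2.0 Flash": 9,
}
GAMES_PER_BOT_PER_MAP = 14  # 7 opponents x 2 games (one per side)
GAMES_PER_MAP = 56          # 28 pairs x 2 games

rates = {bot: d / GAMES_PER_BOT_PER_MAP for bot, d in CENTER_MOUNTAINS_DRAWS.items()}
above_75 = sorted(bot for bot, r in rates.items() if r > 0.75)
# Each drawn game appears in two bots' rows, so halve the total.
drawn_games = sum(CENTER_MOUNTAINS_DRAWS.values()) // 2
print(f"{len(above_75)} of {len(rates)} bots above 75%; "
      f"{drawn_games} of {GAMES_PER_MAP} games drawn")
```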

Notable Observations:

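- AdvancedBot's only loss came from MediumBot (1 game out of 56)

- Gemini 2.0 Flash won 0 games across all matchups; it was the only bot with no wins

- Claude Haiku 4.5 vs ChatGPT 5 Mini ended in 8 draws in 8 games, a complete stalemate

- Token usage: Claude Haiku 4.5 and Claude Sonnet 4.5 averaged about 298K and 309K tokens per game, versus about 323K for ChatGPT 5 Mini, which also generated far more output tokens (about 4.5M in total, versus under 0.7M for either Claude model)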

How to Contribute Results

Have you run a tournament? Share your results with the community!

- Run a tournament using scripts/tournament.py

- Save the results (CSV/JSON files)

- Submit a pull request with your results

- Include replay files for verification

Insights and Analysis

Strategy Patterns

As tournaments are completed, we'll analyze:

- Opening strategies and unit compositions

- Economic vs. military balance

- Successful tactical patterns

- Common mistakes and pitfalls

Bot Strengths and Weaknesses

Each bot type has different characteristics:

- SimpleBot: Predictable but consistent; makes basic strategic errors

- MediumBot: More strategic than SimpleBot; uses coordinated tactics and better unit purchasing

- AdvancedBot: Extends MediumBot with enhanced unit composition, mountain positioning, ranged combat prioritization, and special ability usage

- LLM Bots: Creative but sometimes unpredictable

- Model Bots: Optimized but may overfit to training conditions

Map-Specific Performance

Different maps favor different strategies:

- Small maps (6x6): Fast, aggressive play

- Medium maps (10x10): Balanced economic/military

- Large maps (24x24+): Long-term strategy and positioning

Tournament Archive

All tournament replays are saved and can be watched using the game's replay system:

```bash
# Load a replay file
python3 main.py --mode play --replay path/to/replay.json
```

Replay files include:

- Complete action history

- Game metadata (bots, map, duration)

Because replays are saved as JSON files, they can also be analyzed programmatically.
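Replay files use a `.json` path, so Python's standard `json` module can load them. The sketch below counts actions per player and per action type. The key names `actions`, `player`, and `type` are assumptions about the replay schema, not documented fields. Inspect a real replay file and adjust them before use; the helpers `load_replay` and `summarize_actions` are also hypothetical.

```python
import json
from collections import Counter


def load_replay(path):
    """Load a replay file (a JSON document) into a Python dict."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_actions(replay):
    """Count actions per player and per action type.

    ASSUMPTION: "actions", "player", and "type" are hypothetical key names;
    inspect a real replay file and rename them to match its actual schema.
    """
    actions = replay["actions"]
    per_player = Counter(a["player"] for a in actions)
    per_type = Counter(a["type"] for a in actions)
    return per_player, per_type


# Example with an inline replay that follows the assumed schema;
# for a real file use: summarize_actions(load_replay("path/to/replay.json"))
sample = {"actions": [
    {"player": 1, "type": "move"},
    {"player": 2, "type": "attack"},
    {"player": 1, "type": "attack"},
]}
per_player, per_type = summarize_actions(sample)
print(per_player[1], per_type["attack"])  # 2 2
```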

Future Tournaments

Upcoming tournament ideas:

- Map Variety: Tournaments on different map sizes and layouts

- Specialized Competitions: Economy-focused, combat-focused, speed-run

- Evolution: Tournament of evolved/improved model versions

- Human vs. Bot: Special exhibitions with human players

Resources

- Tournament System Guide - Technical documentation

- Implementation Status - Current features

- Getting Started - Learn how to play

Last updated: December 2025

Tournament results will be updated as official competitions are completed.